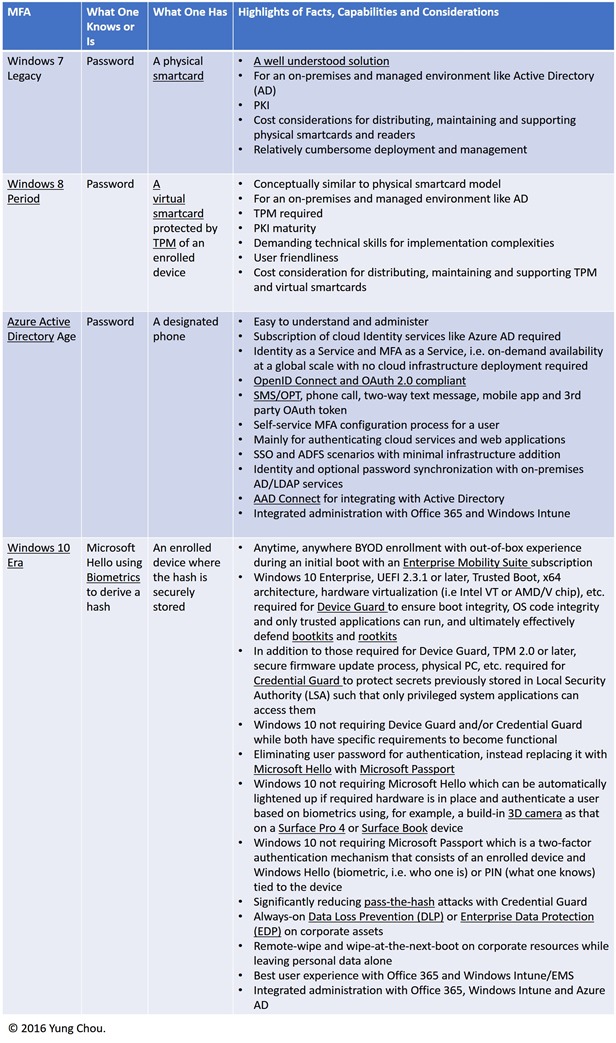

I am starting a series of posts on Windows 10 with an emphasis on its security features. Upcoming posts cover Multi-Factor Authentication (MFA), hardware- and virtualization-based security such as Credential Guard and Device Guard, and Windows as a Service. These features are not only signature deliverables of Windows 10, but also significant initiatives that address fundamental issues of PC security while leveraging the market opportunities presented by the growing BYOD trend. The series nevertheless starts where, in my view, all security discussions should start.

Password It Is

At a very high level, I view security as encompassing two key components. Authentication determines whether a user is as claimed, while authorization grants access rights accordingly upon a successful authentication. The former starts with a presentation of user credentials or identity, i.e. a user name and password, while the latter operates on a security token, or a so-called ticket, derived from a successful authentication. The significance of this model is that a user’s identity, or more specifically the user’s password, since a user name is normally a displayed and unencrypted field, is essential to initiating and acquiring access to a protected resource. A password, however, can easily be stolen or lost, and is arguably the weakest link of a security solution.
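To make the two steps concrete, here is a minimal sketch in Python of authentication issuing a token and authorization operating only on that token. Everything in it is an assumption for illustration: the in-memory `USERS`, `PERMISSIONS` and `SESSIONS` stores, the sample user and the permission names are hypothetical, and this is not how Windows implements tickets.

```python
import hashlib
import hmac
import secrets

# Hypothetical in-memory stores, for illustration only.
_SALT = secrets.token_bytes(16)


def _hash_password(password: str, salt: bytes) -> bytes:
    # Store a salted, slow hash rather than the password itself.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


USERS = {"alice": _hash_password("correct horse battery staple", _SALT)}
PERMISSIONS = {"alice": {"read:statement"}}
SESSIONS = {}  # token -> user name


def authenticate(user: str, password: str):
    """Step 1: check the presented credentials; return a token or None."""
    stored = USERS.get(user)
    if stored is None:
        return None
    candidate = _hash_password(password, _SALT)
    if not hmac.compare_digest(stored, candidate):
        return None
    token = secrets.token_urlsafe(32)
    SESSIONS[token] = user
    return token


def authorize(token, permission: str) -> bool:
    """Step 2: grant access based on the token, never on the password."""
    user = SESSIONS.get(token)
    return user is not None and permission in PERMISSIONS.get(user, set())
```

Note that once `authenticate` succeeds, whoever holds the password holds everything that follows, which is why the password is the part worth worrying about.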

Using the Same Password for Multiple Sites

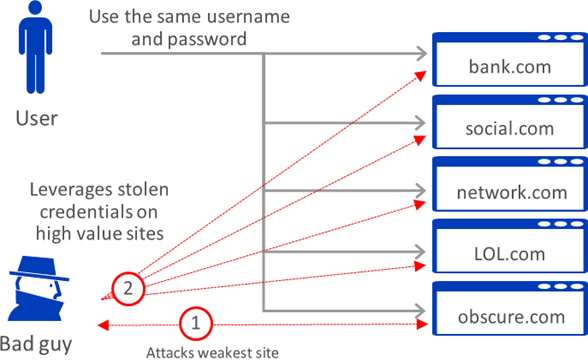

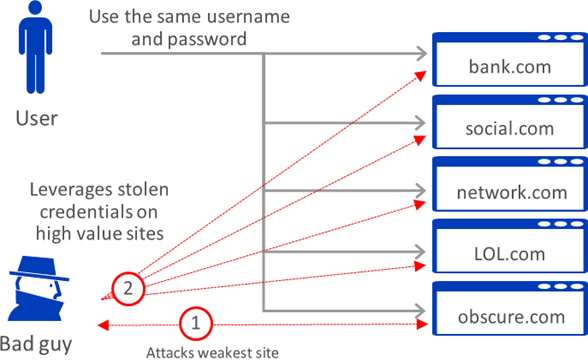

When it comes to cyber security, the shortest distance between two points is not always a straight line. For a hacker to get into your bank account, the quietest way is not necessarily to hack your bank’s web site directly, unless the bank itself is the main target. Institutions like banks and healthcare providers are subject to laws, regulations and mandates to protect customers’ personal information. They have to commit financially and administratively to implementing security solutions, so attacking them is a high-cost and obviously much more difficult operation.

An alternative, as illustrated above, is to attack unregulated, low-profile, lesser-known businesses and mom-and-pop shops where you perhaps order groceries, your favorite leaf teas and neighborhood deliveries, which a hacker can learn about from your posts, likes and comments in social media communities. Many of those shops are family-owned businesses operating on a shoestring budget, with barely enough awareness and technical skill to maintain a web site built with freeware downloaded from some unknown web site. The OS is probably not patched up to date. If there is antivirus software, it may be a free trial that has expired. The point is that for those small businesses, the security of the computing environment is probably not an everyday priority, let alone a commitment to protecting your personal information.

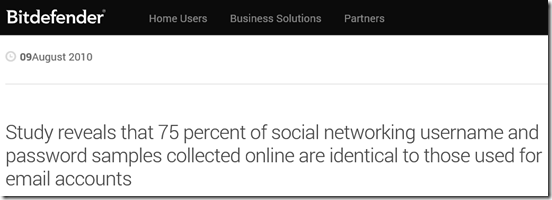

The alarming fact is that many do use the same password for accessing multiple sites.

Bitdefender published a study in August of 2010, as shown above, revealing that more than 250,000 email addresses, usernames and passwords could easily be found online via postings on blogs, collaboration platforms, torrents and other channels. It pointed out that “In a random check of the sample list consisting of email addresses, usernames and passwords, 87 percent of the exposed accounts were still valid and could be accessed with the leaked credentials. Moreover, a substantial number of the randomly verified email accounts revealed that 75 percent of the users rely on the same password to access both their social networking and email accounts.”

On April 23, 2013, Ofcom reported (as shown above) that “More than half (55%) of adult internet users admit they use the same password for most, if not all, websites, according to Ofcom’s Adults’ Media Use and Attitudes Report 2013. Meanwhile, a quarter (26%) say they tend to use easy to remember passwords such as birthdays or names, potentially opening themselves up to the threat of account hacking.” As noted, 1805 adults aged 16 and over were interviewed as part of the research. Although the above statistics are derived from surveying UK adult internet users, they do represent a common practice in internet surfing and raise a security concern.

Convenience at the Risk of Compromising Security

With free Wi-Fi, Internet access is available at coffee shops, bookstores, shopping malls, airports, hotels, just about everywhere. The convenience nevertheless comes with a high risk, since these free access points are also available for hackers to identify, phish and attack targets. Operating your account over public Wi-Fi or using a shared device to access protected information is essentially inviting unauthorized access and an invasion of your privacy. Using the same password for multiple sites further increases the opportunities to compromise your high-profile accounts via a weaker account. It is a poor and potentially costly practice with devastating results, choosing convenience at the risk of compromising security.

User credentials and any Personally Identifiable Information (PII) are valuable assets and exactly what hackers are looking for. Identifying and protecting PII should be an essential part of a security solution.

Fundamental Issues with Password

Examining the facts presented on password use surfaces two issues. First, the security of a password relies heavily on a user’s practices, which is problematic. Second, with stolen user credentials, a hacker can log in remotely from another state or country using a different device. A direct answer to the first issue is simply not to use a password and to use something else instead, like biometrics, eliminating the need for a user to remember a string of strange characters. The answer to the second is to associate user credentials with the user’s local hardware, so that the credentials do not work on a different device. Namely, employ the user’s device as a second factor for MFA.
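The idea of tying credentials to a device can be sketched as a simple challenge and response. This is only an illustration under loud assumptions: the `Device` and `Server` classes are hypothetical, and the sketch uses a shared secret with HMAC to stay within Python's standard library, whereas a real design would more likely keep an asymmetric private key in the device hardware. It is not a description of how Windows 10 does it.

```python
import hashlib
import hmac
import secrets


class Device:
    """Hypothetical device holding a secret created at enrollment."""

    def __init__(self):
        self._key = secrets.token_bytes(32)

    def enrollment_key(self) -> bytes:
        # Assumption: shared once with the server over a trusted channel.
        return self._key

    def respond(self, challenge: bytes) -> bytes:
        # Prove possession of the device key without sending the key.
        return hmac.new(self._key, challenge, hashlib.sha256).digest()


class Server:
    """Hypothetical server that remembers one enrolled device per user."""

    def __init__(self):
        self._device_keys = {}

    def enroll(self, user: str, device: Device) -> None:
        self._device_keys[user] = device.enrollment_key()

    def new_challenge(self) -> bytes:
        # A fresh random challenge per sign-in prevents replaying old responses.
        return secrets.token_bytes(16)

    def verify(self, user: str, challenge: bytes, response: bytes) -> bool:
        key = self._device_keys.get(user)
        if key is None:
            return False
        expected = hmac.new(key, challenge, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)
```

In this model, a hacker in another country who knows the user name still fails `verify`, because the response must come from the enrolled device.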

Closing Thoughts

The password is the weakest link in a security solution. Keep it complex and long. Exercise common sense in protecting your credentials. Whether you have supposedly won a trip to Hawaii or $25,000 in free money, do not read those suspicious emails. Before clicking a link, read the URL in its entirety and make sure it is legitimate. None of this is new; it is just a review of what we learned in the grade school of computer security.
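Reading a URL in its entirety matters because the part that counts is the host name, not whatever familiar text appears elsewhere in the link. Here is a small sketch using Python's standard `urllib.parse`. The function name `is_expected_host` and the sample domains are hypothetical, and a check like this is only one signal, not proof that a link is safe.

```python
from urllib.parse import urlsplit


def is_expected_host(url: str, expected_domain: str) -> bool:
    """Return True only for https URLs on the expected domain or a subdomain."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # hostname drops any "user@" prefix and port, and is lower-cased.
    host = (parts.hostname or "").rstrip(".")
    expected = expected_domain.lower()
    return host == expected or host.endswith("." + expected)
```

For example, `https://example-bank.com.evil.example/login` and `https://example-bank.com@evil.example/` both begin with a familiar name, yet both are rejected because the actual host is under `evil.example`.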

Evidence nevertheless shows that many of us tend to use the same password for multiple sites as the number of passwords grows and they become complex and hard to remember. As a result, the risk of unauthorized access becomes high.

For IT, eliminating passwords and replacing them with biometrics is an emerging trend. Multi-Factor Authentication needs to be implemented sooner rather than later. Assessing hardware- and virtualization-based security to fundamentally design out rootkits and ensure hardware boot integrity and OS code integrity should be a top priority. These are the subjects to be examined as this blog post series continues.
